Site Update: Welcome to the Grid!

TL;DR: Some details about the recent addition of a scrolling background grid to the site.

2 minutes

Recently, I decided to try my hand at some CSS shenanigans, and spent a few hours replacing this site’s long-serving background image with a scrolling grid background.

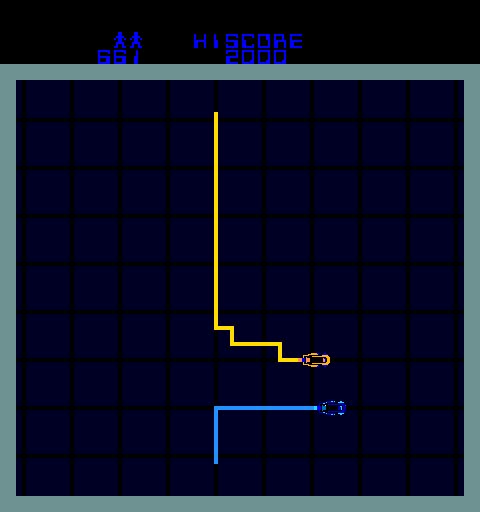

I’ve been obsessed with digital grids ever since I first saw the movie Tron (presumably during its initial HBO release, when I was around 6). Tron and Flynn were some of my first heroes (they fought for the users), and I remember being blown away not just by the movie, but by being able to play the same game they play in the movie via the incredible Tron arcade cabinet. I distinctly remember going to the Chuck E. Cheese’s near our house and playing it, complete with its special blue joystick. Just like the movie, it was amazing. And it was all grids.

So that’s the “why”. As for the “how”…

The capabilities of web rendering engines (AKA browsers) have improved immensely over the last few years, particularly in the area of CSS effects. A link to a link to a link led me to a couple of Stack Exchange questions and a collection of fantastic synthwave-inspired CSS effects. The next thing I knew, I’d replaced the site’s static background image with a scrolling one.

Except… I know that not everyone likes moving background effects, so the only responsible way to add an effect like that is with a toggle that lets the site visitor turn it on and off at will. And the only responsible way to add an interactive toggle like that is via progressive enhancement: visitors without JS enabled (or whose browsers don’t support <script type="module">) get just a static background grid, while those who do have JS get both the scrolling grid AND the toggle, tying the presence of the feature to the ability to disable it.
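A minimal sketch of what a toggle like that could look like, assuming the stylesheet shows the static grid by default and only animates it when a hypothetical `grid-animated` class is present on `<html>`. The class name, the `grid-animation` storage key, the button label, and the fallback to the visitor’s `prefers-reduced-motion` setting are all my own assumptions for illustration, not details taken from the actual commit. Loaded as a module script, it simply never runs for visitors without JS or module support, so they keep the static grid and never see a toggle that does nothing.

```javascript
// Hypothetical localStorage key for remembering the visitor's choice.
const GRID_STORAGE_KEY = "grid-animation";
// Hypothetical class the stylesheet would use to switch on the scrolling animation.
const GRID_ANIMATED_CLASS = "grid-animated";

// Pure decision logic: an explicit stored choice wins; otherwise respect the
// visitor's OS-level reduced-motion setting (an assumption, not from the post).
function shouldAnimateGrid(storedPreference, prefersReducedMotion) {
  if (storedPreference === "on") return true;
  if (storedPreference === "off") return false;
  return !prefersReducedMotion;
}

// DOM wiring: apply the initial state, then add an on/off toggle button.
function initGridToggle(doc, win) {
  const root = doc.documentElement;
  const stored = win.localStorage.getItem(GRID_STORAGE_KEY);
  const reduced = win.matchMedia("(prefers-reduced-motion: reduce)").matches;
  root.classList.toggle(GRID_ANIMATED_CLASS, shouldAnimateGrid(stored, reduced));

  const button = doc.createElement("button");
  button.type = "button";
  button.textContent = "Animate background grid";
  // aria-pressed tells assistive tech whether the toggle is currently on.
  const syncPressed = () =>
    button.setAttribute("aria-pressed", String(root.classList.contains(GRID_ANIMATED_CLASS)));

  button.addEventListener("click", () => {
    // classList.toggle returns true if the class is now present.
    const on = root.classList.toggle(GRID_ANIMATED_CLASS);
    win.localStorage.setItem(GRID_STORAGE_KEY, on ? "on" : "off");
    syncPressed();
  });

  syncPressed();
  doc.body.append(button);
}

// Only wires up the DOM when running in a browser; the toggle exists only if JS runs.
if (typeof document !== "undefined") {
  initGridToggle(document, window);
}
```

Keeping `shouldAnimateGrid` free of any DOM access makes the on/off decision easy to reason about (and test) separately from the button wiring.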

If you want to know more specifics, check out the commit on my self-hosted git server, in particular the changes to the scripts.js and styles.css files.

Because I, too, fight for the users.

Update: 2024-04-27

Since posting this, I’ve come to realize that the CSS-based grid solution I described above had some problems, most notably a scrolling-performance hit, especially on long pages. The mere presence of the effect was causing clipping issues and serious scroll lag all over the site (really, on any page longer than one vertical screen). Plus, the animations themselves tended to get super janky the longer they ran.

Not as “user”-empowering as I’d hoped. 😦

In the end, I wound up trading out my pure CSS solution for an implementation that uses a tiny SVG file, as it is much more performant, while still allowing for color customization (I wonder why… 🤔). It’s a little bit “flashy”, but it’s still a decided improvement.
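One way a tiny SVG tile can stay color-customizable is to build it at runtime with the color baked in and hand it to CSS as a data URI. This is a sketch of that general idea, not necessarily how the site’s own SVG works; the function names, the default size and color, and the `--grid-tile` custom property are hypothetical. The tile draws only its top and left edges, so repeating it as a background produces a full grid.

```javascript
// Build a square SVG tile with lines along its top and left edges only.
// When the tile repeats, those edges join up into a continuous grid.
// Defaults (size, color, stroke width) are hypothetical.
function gridTileSvg({ size = 40, color = "#ff00ff", strokeWidth = 1 } = {}) {
  // Offset by half the stroke so the full line width stays inside the tile.
  const o = strokeWidth / 2;
  return (
    `<svg xmlns="http://www.w3.org/2000/svg" width="${size}" height="${size}" viewBox="0 0 ${size} ${size}">` +
    `<path d="M ${size} ${o} H ${o} V ${size}" fill="none" stroke="${color}" stroke-width="${strokeWidth}"/>` +
    `</svg>`
  );
}

// Wrap the SVG as a CSS url() value. encodeURIComponent escapes '#', '"', '<'
// and friends, so hex colors survive inside the data URI.
function gridTileDataUri(options) {
  return `url("data:image/svg+xml,${encodeURIComponent(gridTileSvg(options))}")`;
}

// In a browser, expose the tile through a hypothetical CSS custom property.
if (typeof document !== "undefined") {
  document.documentElement.style.setProperty("--grid-tile", gridTileDataUri({ color: "#00e5ff" }));
}
```

A stylesheet could then use something like `background-image: var(--grid-tile);` on the page, and changing the color is just a matter of calling `gridTileDataUri` again with a different value.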